Best Graphics Cards 2024 – Top Gaming GPUs for the Money

The best graphics cards are the beating heart of any gaming PC, and everything else comes second. Without a powerful GPU pushing pixels, even the fastest CPU won’t manage much. While no one graphics card will be right for everyone, we’ll provide options for every budget and mindset below. Whether you’re after the fastest graphics card, the best value, or the best card at a given price, we’ve got you covered.

Where our GPU benchmarks hierarchy ranks all of the cards based purely on performance, our list of the best graphics cards looks at the whole package. Current GPU pricing, performance, features, efficiency, and availability are all important, though the weighting becomes more subjective. Factoring in all of those aspects, these are the best graphics cards that are currently available.

The only ‘new’ GPU to launch in February was the RX 7900 GRE, joining the four GPUs that came out in January: RTX 4080 Super, RTX 4070 Ti Super, RTX 4070 Super, and RX 7600 XT. All of these are in our performance charts, and some make our overall picks, replacing former entries that are now discontinued. Barring a surprise announcement in the coming months, this should be the end of new models from AMD and Nvidia until the future Blackwell and RDNA 4 GPUs arrive — most likely in 2025.

There’s also the “made for China” RTX 4090 D, which isn’t easy to find in the U.S. and tends to cost just as much as the faster non-D model, so we’ll skip that. The 7900 GRE has become a worldwide part, so perhaps the 4090 D will eventually follow suit. We’re primarily a U.S. and western-focused site, however, so we’ll confine our discussion to cards available in the U.S. market.

Intel’s Arc Alchemist GPUs rate more as previous generation hardware, as they’re manufactured on TSMC N6 and compete more directly against the RTX 3060 and RX 6700 10GB instead of newer parts. The Arc A750 periodically falls below $200 (we’ve seen it sell for as little as $179) and remains a competitive option, if you don’t mind the occasional driver snafus and higher power use.

Note: We’re showing current online prices alongside the official launch MSRPs in the above table, with the GPUs sorted by performance. Retail prices can fluctuate quite a bit over the course of a month; the table lists the best we could find at the time of writing.

The above list shows all the current generation graphics cards, grouped by vendor and sorted by reverse product name, so faster and more expensive cards are at the top of the chart. There are 22 current generation parts, and most of them are viable choices for the right situation. Previous generation cards are generally not worth considering these days, as most are discontinued and being phased out. If you want to see how the latest GPUs stack up in comparison to older parts, check our GPU benchmarks hierarchy.

The performance ranking incorporates 15 games from our latest test suite, with both rasterization and ray tracing performance included. Note that we are not including upscaling results in the table, which would generally skew things more in favor of Nvidia GPUs, depending on the selection of games.

While performance can be an important criterion for a lot of gamers, it’s not the only metric that matters. Our subjective rankings below factor in price, power, and features colored by our own opinions. Others may offer a slightly different take, but all of the cards on this list are worthy of your consideration.

Best Graphics Cards for Gaming 2024

The best overall GPU right now

Specifications

GPU: AD104

GPU Cores: 7168

Boost Clock: 2,475 MHz

Video RAM: 12GB GDDR6X 21 Gbps

TGP: 220 watts

Reasons to buy

+

Good overall performance

+

Excellent efficiency

+

DLSS, AI, AV1, and ray tracing

+

Improved value over non-Super 4070

Reasons to avoid

–

12GB is the minimum for a $400+ GPU

–

Generational price hike

–

Frame Generation marketing

–

Ugly 16-pin adapters

Nvidia just refreshed its 40-series lineup with the new Super models. Of the three, the RTX 4070 Super will be the most interesting for the most people. It inherits the same $599 MSRP as the non-Super 4070 (which has dropped to $549 to keep it relevant), with all the latest features of the Nvidia Ada Lovelace architecture. It’s slightly better than a linear boost in performance relative to price, which is as good as you can hope for these days.

Unlike some of the other models, the RTX 4070 Super also seems to have plenty of base-MSRP models available at retail. We like the stealthy black aesthetic of the Founders Edition, and it runs reasonably cool and quiet, but third-party cards with superior cooling are also available, sometimes at lower prices than the reference card.

The 4070 Super bumps core counts by over 20% compared to the vanilla 4070, and in our testing we’ve found that the general lack of changes to the memory subsystem doesn’t impact performance as much as you might expect. It’s still 16% faster overall (at 1440p), even with the same VRAM capacity and bandwidth — though helped by the 33% increase in L2 cache size.
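
To put that scaling in numbers, here is a rough Python sketch that uses the core counts from the spec lists in this article and the 16% uplift at 1440p quoted above. The helper name and the "scaling efficiency" metric are our own illustrative choices, not something taken from a benchmark tool.

```python
def percent_increase(new: float, old: float) -> float:
    """Return how much larger `new` is than `old`, in percent."""
    return (new / old - 1.0) * 100.0

# Shader core counts from the spec lists in this article.
CORES_4070_SUPER = 7168
CORES_4070 = 5888

# Measured 1440p uplift quoted in the text.
MEASURED_UPLIFT_PCT = 16.0

core_uplift = percent_increase(CORES_4070_SUPER, CORES_4070)

# Illustrative metric (our assumption): the share of the extra cores
# that shows up as extra performance.
scaling_efficiency = MEASURED_UPLIFT_PCT / core_uplift

print(f"Core count increase: {core_uplift:.1f}%")       # 21.7%
print(f"Scaling efficiency:  {scaling_efficiency:.2f}")  # 0.74
```

Roughly three quarters of the added cores translate into extra performance, which fits a card whose memory bandwidth did not change.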

Compared to the previous generation Ampere GPUs, even with less raw bandwidth, the 4070 Super generally matches or beats the RTX 3080 Ti, and clearly outperforms the RTX 3080. What’s truly impressive is that it can do all that while cutting power use by over 100W.

Further Reading:

Nvidia GeForce RTX 4070 Super review

AMD Radeon RX 7900 GRE — Sapphire Pulse (Image credit: Tom’s Hardware)

AMD’s best overall GPU right now

Specifications

GPU: RDNA 3 Navi 31

GPU Cores: 5120

Boost Clock: 2,245 MHz

Video RAM: 16GB GDDR6 18 Gbps

TBP: 260 watts

Reasons to buy

+

Plenty of VRAM and 256-bit interface

+

Great for 1440p and 1080p

+

Strong in rasterization testing

+

AV1 and DP2.1 support

Reasons to avoid

–

Still lacking in DXR and AI performance

–

Not as efficient as Nvidia

The Radeon RX 7900 GRE displaces the RX 7800 XT as our top AMD pick. It uses the AMD RDNA 3 architecture, but instead of the Navi 32 GPU in the 7800 XT, it sticks with the larger Navi 31 GCD and offers 33% more compute units. AMD balances that by reducing the GPU and GDDR6 clocks, though overclocking may recover some of those losses once AMD fixes the 7900 GRE overclocking ‘bug’ in its drivers. The 7800 XT remains a close second, however, and is still a good alternative here.

Both the 7900 GRE and 7800 XT offer a good blend of performance and price, but the 7900 GRE ends up about 10% faster for 10% more money. Linear performance scaling on a high-end GPU makes the more expensive card the best overall pick of Team Red’s current lineup. That’s assuming you’re like us and tend to want a great 1440p gaming experience while spending as little as possible. There are certainly faster GPUs, but the 7900 XT costs 27% more while delivering about 20% more performance, so diminishing returns kick in beyond this point.
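
The diminishing returns argument can be sketched in a few lines of Python. The ratios are the ones quoted above, and we assume the 7900 XT figures are relative to the 7900 GRE; `relative_value` is a hypothetical helper name, and the result is only as good as those rounded percentages.

```python
def relative_value(perf_ratio: float, price_ratio: float) -> float:
    """Performance per dollar relative to a baseline card (1.0 = same value)."""
    return perf_ratio / price_ratio

# Ratios quoted in the text. Assumption: each step is measured
# against the next card down the stack.
gre_vs_7800xt = relative_value(perf_ratio=1.10, price_ratio=1.10)
xt_vs_gre = relative_value(perf_ratio=1.20, price_ratio=1.27)

print(f"7900 GRE vs 7800 XT: {gre_vs_7800xt:.2f}x performance per dollar")
print(f"7900 XT vs 7900 GRE: {xt_vs_gre:.2f}x performance per dollar")
```

A result of 1.00 means the step up costs exactly what it delivers. The 7900 XT lands at about 0.94, so each extra dollar buys slightly less performance than it does on the GRE.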

Efficiency has been a bit hit or miss with RDNA 3, but the 7900 GRE ends up as AMD’s most efficient GPU right now, thanks to the reduced clocks. More processing clusters running at lower clocks is a good way to improve efficiency. It’s also faster than the previous generation 6950 XT while using over 60W less power. In addition, you get AV1 encoding support and DP2.1 video output, plus improved compute and AI capabilities — it’s over three times the image throughput of the 6950 XT in Stable Diffusion, for example.

AMD continues to offer more bang for the buck in rasterization games than Nvidia, though it also falls behind in ray tracing, sometimes far behind in games like Alan Wake 2 that support full path tracing. Is that something you want to try? It doesn’t radically change the gameplay, though it can look better overall. If you’re curious about fully path traced games, including future RTX Remix mods, Nvidia remains the best option. Otherwise, AMD’s rasterization performance on the 7900 GRE ends up tying the 4070 Super at 1440p, for $50 less.

We’re also at the point where buying a new GPU that costs over $500 means we really want to see 16GB of memory. Yes, Nvidia fails in that regard, though in practice it seems as though Nvidia roughly matches AMD’s 16GB cards with 12GB offerings. There are exceptions of course, but the RX 7900 GRE still checks all the right boxes for a high-end graphics card that’s still within reach of most gamers.

Further reading:

AMD Radeon RX 7900 GRE review

AMD Radeon RX 7800 XT review

A finely-balanced high-end Nvidia GPU

Specifications

GPU: AD104

GPU Cores: 5888

Boost Clock: 2,475 MHz

Video RAM: 12GB GDDR6X 21 Gbps

TGP: 200 watts

Reasons to buy

+

Excellent efficiency and good performance

+

Good for 1440p gaming

+

DLSS, DLSS 3, and DXR features

Reasons to avoid

–

Generational price hike

–

Frame Generation marketing

–

12GB is the minimum we’d want with a $400+ GPU

Nvidia’s RTX 4070 didn’t blow us away with extreme performance or value, but it’s generally equal to the previous generation RTX 3080, comes with the latest Ada Lovelace architecture and features, and costs about $100 less. Now, with the launch of the RTX 4070 Super (see above), it also got a further price cut and the lowest cost cards start at around $530.

Nvidia rarely goes after the true value market segment, but with the price adjustments brought about by the recent 40-series Super cards, things are at least reasonable. The RTX 4070 can still deliver on the promise of ray tracing and DLSS upscaling, it only uses 200W of power (often less), and in overall performance it edges out AMD’s RX 7800 XT: slightly slower in rasterization, faster in ray tracing, plus it has DLSS support.

Nvidia is always keen to point out how much faster the RTX 40-series is, once you enable DLSS 3 Frame Generation. As we’ve said elsewhere, these generated frames aren’t the same as “real” frames and increase input latency. It’s not that DLSS 3 is bad, but we prefer to compare non-enhanced performance, and in terms of feel we’d say DLSS 3 improves the experience over the baseline by perhaps 10–20 percent, not the 50–100 percent you’ll see in Nvidia’s performance charts.

The choice between the RTX 4070 and the above RTX 4070 Super really comes down to whether you’re willing to pay a bit more for proportionately higher performance, or if you’d rather save money. The two offer nearly the same value proposition otherwise, with the 4070 Super costing 13% more while offering 16% higher performance on average.

Further Reading:

Nvidia GeForce RTX 4070 review

The fastest GPU — great for creators, AI, and professionals

Specifications

GPU: Ada AD102

GPU Cores: 16384

Boost Clock: 2,520 MHz

Video RAM: 24GB GDDR6X 21 Gbps

TGP: 450 watts

Reasons to buy

+

The fastest GPU, period

+

Excellent 4K and maybe even 8K gaming

+

Powerful ray tracing hardware

+

DLSS and DLSS 3

+

24GB is great for content creation workloads

Reasons to avoid

–

Extreme price and power requirements

–

Needs a fast CPU and large PSU

–

Frame Generation is a bit gimmicky

For some, the best graphics card is the fastest card, pricing be damned. Nvidia’s GeForce RTX 4090 caters to precisely this category of user. It was also the debut of Nvidia’s Ada Lovelace architecture and represents the most potent card Nvidia has to offer, possibly until 2025 when the next generation GPUs are rumored to arrive.

Note also that pricing of the RTX 4090 has become quite extreme, with most cards now selling above $2,000 thanks to the China RTX 4090 export restrictions. If you don’t already have a 4090, you’re probably best off giving it a pass now.

The RTX 4090 opens up a large gap between itself and the next closest Nvidia GPU. Across our suite of gaming benchmarks, it’s 37% faster overall than the RTX 4080 at 4K, and 33% faster than the RTX 4080 Super. It’s also 51% faster than AMD’s top performing RX 7900 XTX, though it also costs nearly twice as much online right now.

Let’s be clear about something: You really need a high refresh rate 4K monitor to get the most out of the RTX 4090. At 1440p its advantage over a 4080 shrinks to 24%, and it’s only 15% at 1080p — and that includes some demanding DXR games. The lead over the RX 7900 XTX also falls to only 32% at 1080p. Not only do you need a high resolution, high refresh rate monitor, but you’ll also want the fastest CPU possible to get the most out of the 4090.

It’s not just gaming performance, either. In professional content creation workloads like Blender, Octane, and V-Ray, the RTX 4090 is up to 45% faster than the RTX 4080. And with Blender, it’s over three times faster than the RX 7900 XTX. Don’t even get us started on artificial intelligence tasks. In Stable Diffusion testing, the RTX 4090 is also around triple the performance of the 7900 XTX for 512×512 and 768×768 images.

There are numerous other AI workloads that currently only run on Nvidia GPUs. In short, Nvidia knows a thing or two about content creation applications. The only potential problem is that it uses drivers to lock improved performance in some apps (like some of those in SPECviewperf) to its true professional cards, i.e. the RTX 6000 Ada Generation.

AMD’s RDNA 3 response to Ada Lovelace might be a better value, at least if you’re only looking at rasterization games, but for raw performance the RTX 4090 reigns as the current champion. Just keep in mind that you may also need a CPU and power supply upgrade to get the most out of the 4090.

Read: Nvidia GeForce RTX 4090 review

AMD Radeon RX 7900 XTX (Image credit: Tom’s Hardware)

AMD’s fastest GPU, great for rasterization

Specifications

GPU: Navi 31

GPU Cores: 6144

Boost Clock: 2,500 MHz

Video RAM: 24GB GDDR6 20 Gbps

TBP: 355 watts

Reasons to buy

+

Great overall performance

+

Lots of VRAM and cache

+

Great for non-RT workloads

+

Good SPECviewperf results

Reasons to avoid

–

$1,000 starting price

–

Much slower RT performance

–

Weaker in AI / deep learning workloads

AMD’s Radeon RX 7900 XTX rates as the fastest graphics card from AMD, and lands near the top of the charts — with a generational price bump to match. Officially priced at $999, the least expensive models now start at around $930, and supply has basically caught up to demand. There’s good reason for the demand, as the 7900 XTX comes packing AMD’s latest RDNA 3 architecture.

That gives the 7900 XTX a lot more potential compute, and you get 33% more memory and bandwidth as well. Compared to the RX 6950 XT, on average the new GPU is 44% faster at 4K, though that shrinks to 34% at 1440p and just 27% at 1080p. It also delivers that performance boost without dramatically increasing power use or graphics card size.

AMD remains a potent solution for anyone that doesn’t care as much about ray tracing — and when you see the massive hit to performance for often relatively mild gains in image fidelity, we can understand why many feel that way. Still, the number of games with RT support continues to grow, and most of those also support Nvidia’s DLSS technology, something AMD hasn’t fully countered even if FSR2/FSR3 can at times come close. If you want the best DXR/RT experience right now, Nvidia still wins hands down.

AMD’s GPUs can also be used for professional and content creation tasks, but here things get a bit hit and miss. Certain apps in the SPECviewperf suite run great on AMD hardware, others come up short. However, if you want to do AI or deep learning research, there’s no question Nvidia’s cards are a far better pick. For this generation, the RX 7900 XTX is AMD’s fastest option, and it definitely packs a punch. If you’re willing to step down to the 7900 XT, that’s also worth considering (see below), as it tends to be priced quite a bit lower.

Further Reading:

AMD Radeon RX 7900 XTX review

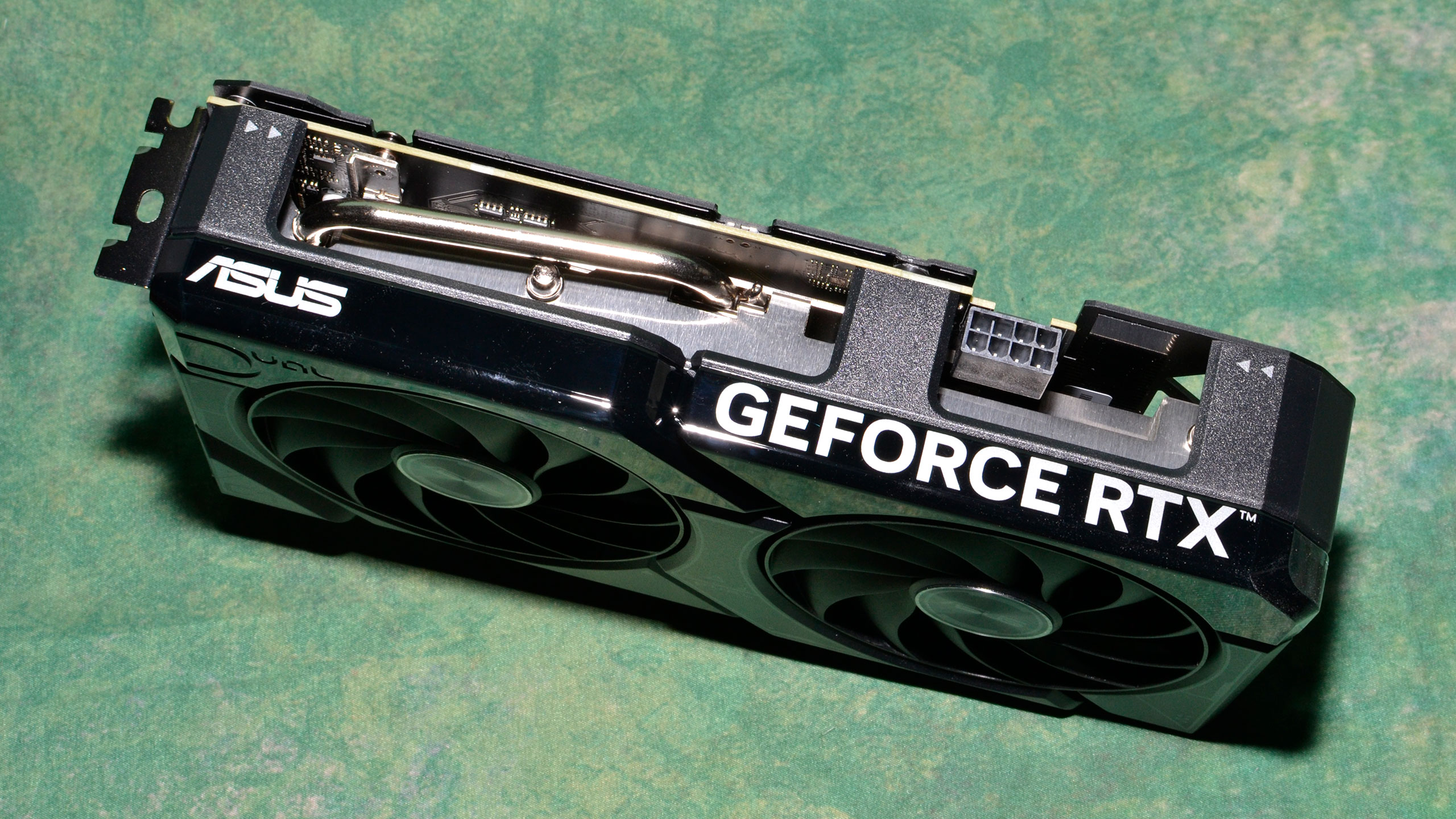

Asus GeForce RTX 4060 Dual (Image credit: Tom’s Hardware)

6. GeForce RTX 4060

The best budget Nvidia GPU

Specifications

GPU: Ada AD107

GPU Cores: 3072

Boost Clock: 2,460 MHz

Video RAM: 8GB GDDR6 17 Gbps

TGP: 115 watts

Reasons to buy

+

Great 1080p performance

+

Very efficient and quiet

+

Faster and cheaper than RTX 3060

Reasons to avoid

–

Only 8GB of VRAM

–

128-bit memory interface

–

It seems more like an RTX 4050

With the launch of the RTX 4060, Nvidia appears to have gone as low as it plans for this generation of desktop graphics cards based on the Ada Lovelace architecture. We’re not ruling out an eventual desktop RTX 4050, but we’re also skeptical such a card would warrant consideration, considering how pared down the 4060 is already.

There are certainly drawbacks with this level of GPU. Nvidia opted to cut down the memory interface to just 128 bits, which in turn limits the memory capacity options. Nvidia could do a 16GB card if it really wanted, but 8GB is the standard configuration and we don’t expect anything else — only the 4060 Ti 16GB has the doubled VRAM option, and we weren’t particularly impressed by that card. The 4060-class cards also have an x8 PCIe interface, which shouldn’t matter too much though it might reduce performance if you’re on an older platform that only supports PCIe 3.0.
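
The link between bus width and capacity is simple arithmetic, sketched below in Python. It assumes each GDDR6 chip sits on a 32-bit channel, that chips come in 1GB and 2GB densities, and that "clamshell" mode places two chips on each channel. The function name is ours, and the model ignores any other board-level constraints.

```python
CHIP_INTERFACE_BITS = 32  # assumption: one GDDR6 chip per 32-bit channel

def capacity_options_gb(bus_width_bits: int, chip_densities_gb=(1, 2)) -> list[int]:
    """List the VRAM sizes a given bus width allows under the simple model above."""
    channels = bus_width_bits // CHIP_INTERFACE_BITS
    options = set()
    for density in chip_densities_gb:
        options.add(channels * density)      # one chip per channel
        options.add(channels * density * 2)  # clamshell: two chips per channel
    return sorted(options)

print(capacity_options_gb(128))  # [4, 8, 16]
print(capacity_options_gb(192))  # [6, 12, 24]
```

Under these assumptions a 128-bit card can only ship with 4GB, 8GB, or 16GB, which is why there is no 12GB option without a wider 192-bit bus.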

The good news is that, as promised, performance is faster than the previous generation RTX 3060, by about 20% at 1080p and 1440p. There are edge cases in some games (meaning, 4K at max settings) where the 12GB on the 3060 can pull ahead, but performance is already well below the acceptable level at that point. As an example, Borderlands 3 ran at 26.5 fps on the 4060 versus 28.9 fps on the 3060 at 4K Badass settings; neither is a great experience, even though the 3060 is technically faster.

There are other benefits with the 4060 as well. You get all the latest Ada features, including DLSS 3 support. Also, the power draw is just 115W for the reference model, and typically won’t exceed 125W on overclocked cards (like the Asus Dual OC that we used for testing). Most RTX 4060 cards also don’t bother with the questionable 16-pin power connector and adapter shenanigans.

AMD’s closest alternative is the previous generation RX 6700 XT. You get the usual results: higher rasterization performance from AMD, worse ray tracing performance, and higher power requirements (about 100W more in this case). The new RX 7600 XT ostensibly competes with the 4060 as well, but it costs more and we still think the 6700 XT (or 6750 XT) is a better pick than the new 7600 XT.

An alternative view is that this is an upgraded RTX 3050, with the same 115W TGP and 60% better performance. Too bad it costs $50 extra, though the 3050 was mostly priced at $300 and above until very late in its life cycle.

Read: Nvidia GeForce RTX 4060 review

AMD Radeon RX 7900 XT reference card (Image credit: Tom’s Hardware)

AMD’s penultimate GPU, with decent pricing

Specifications

GPU: RDNA 3 Navi 31

GPU Cores: 5376 (10,752)

Boost Clock: 2,400 MHz

Video RAM: 20GB GDDR6 20 Gbps

TBP: 315 watts

Reasons to buy

+

Lots of fast VRAM

+

Now available for $200 below MSRP

+

Great for up to 4K with rasterization

Reasons to avoid

–

Still slower in DXR games

–

Much slower in AI workloads

–

The MSRP was too high at launch

With prices now heading up on many previous generation cards, AMD’s RX 7900 XT has become more attractive with time. It generally beats the RTX 4070 Ti in rasterization performance but trails by quite a bit in ray tracing games — with both cards now starting at around $699. That brings some good competition from AMD, with all the RDNA 3 architectural updates.

AMD also doesn’t skimp on VRAM, providing you with 20GB. That’s 67% more than the competing 4070 Ti. However, you won’t get DLSS support, and FSR2 works on Nvidia as well as AMD, so it’s not really an advantage (plus DLSS still looks better). Some refuse to use upscaling of any form, however, so the importance of DLSS and FSR2 can be debated.

Something else to consider is that while it’s possible to run AI workloads on AMD’s GPUs, performance can be substantially slower. That’s because the “AI Accelerators” in RDNA 3 share the same execution pipelines as the GPU shaders, and FP16 or INT8 throughput is only double the FP32 rate. That’s enough for AI inference, but it only matches a modest GPU like the RTX 3060 in pure AI number crunching. Most AI projects are also heavily invested in Nvidia’s ecosystem, which makes them easier to get running.
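
For a sense of scale, theoretical peak throughput can be estimated from the spec list above. This Python sketch assumes the parenthetical 10,752 figure is the dual-issue FP32 lane count, counts a fused multiply-add as two operations, and uses the listed boost clock. Real workloads will land well below these paper numbers.

```python
def peak_tflops(lanes: int, clock_mhz: float, ops_per_lane_per_clock: int = 2) -> float:
    """Theoretical peak throughput in TFLOPS.

    ops_per_lane_per_clock defaults to 2 because a fused multiply-add
    counts as two floating-point operations.
    """
    return lanes * ops_per_lane_per_clock * clock_mhz * 1e6 / 1e12

# RX 7900 XT figures from the spec list: 5376 shaders, treated here as
# 10,752 FP32 lanes (our reading of the parenthetical), at 2,400 MHz.
fp32 = peak_tflops(lanes=10_752, clock_mhz=2400)

# Per the text, FP16 and INT8 throughput is double the FP32 rate.
fp16 = 2 * fp32

print(f"Peak FP32: {fp32:.1f} TFLOPS")  # 51.6
print(f"Peak FP16: {fp16:.1f} TFLOPS")  # 103.2
```

Because the FP16 figure is just twice the shader rate, there is no separate pool of matrix hardware to draw on, which is the point the paragraph above makes.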

AMD made a lot of noise about its new innovative GPU chiplet architecture, and it could certainly prove to be a game changer… in future iterations. For now, GPU chiplets are more about saving cost than improving performance. Consider that the die sizes of AD104 and Navi 31’s GCD are similar, but AMD also has to add five MCDs, and you can see why it was supposed to be the more expensive card. And yet, performance still slightly favors Nvidia’s 4070 Ti overall, and that’s before accounting for DLSS and DLSS 3.

While the RX 7900 XT is good and offers a clear performance upgrade over the prior generation, you may also want to consider the above RX 7800 XT. It costs $200 less (a 29% decrease) and performance is only about 20% lower. Unfortunately, overall generational pricing has increased, with new product names to obfuscate that fact. If the 7900 XTX replaced the 6900 XT, then logically this represents a 6800 XT replacement, but that’s not the way AMD decided to name things.

Read: AMD Radeon RX 7900 XT review

Intel Arc A750 Limited Edition, now officially discontinued (Image credit: Tom’s Hardware)

Team Blue’s budget-friendly GPU, an excellent value

Specifications

GPU: ACM-G10

GPU Cores: 3584

Boost Clock: 2,400 MHz

Video RAM: 8GB GDDR6 16 Gbps

TDP: 225 watts

Reasons to buy

+

Great value at current prices

+

Excellent video codec support

+

Good overall performance

Reasons to avoid

–

Needs modern PC with ReBAR support

–

Not particularly efficient

–

Driver issues can still occur

Testing the Intel Arc A750 was a bit like dealing with Dr. Jekyll and Mr. Hyde. At times, performance looked excellent, sometimes surpassing the GeForce RTX 3060. Other times, Arc came up far short of expectations, trailing the RTX 3050. The drivers continued to improve, however, and with prices now starting at around $200, this represents an excellent value — just note that some of the cost savings will ultimately show up in your electrical bill, as it’s not as efficient as the competition.

There are some compromises, like the 8GB of VRAM; the A770 doubles that to 16GB, but also costs around $100 extra. Intel’s A750 also has to go up against AMD’s RX 7600, which is the primary competition at this price (even if AMD’s prices have recently increased). Depending on the game, performance may end up favoring one or the other, though Intel now holds the overall edge by a scant 2% at 1080p ultra. As with Nvidia GPUs, ray tracing games tend to favor Intel, while rasterization games are more in the AMD camp.

Intel was the first company to deliver hardware accelerated AV1 encoding and decoding support, and QuickSync continues to deliver an excellent blend of encoding performance and quality. There’s also XeSS, basically a direct competitor to Nvidia’s DLSS, except it uses Arc’s Matrix cores when present, and can even fall back into DP4a mode for non-Arc GPUs. But DLSS 2 still comes out on top, and it’s in far more games.

The Arc A750 isn’t a knockout blow, by any stretch, but it’s also nice to have a third player in the GPU arena. The A750 competes with the RTX 3060 and leaves us looking forward to Intel’s future Arc Battlemage GPUs, which could conceivably arrive later this year. You can also check out the Arc A770 16GB, if you’re willing to give Intel a chance, though it’s a steep upsell these days.

Further Reading:

Intel Arc A750 Limited Edition review

Intel Arc A770 Limited Edition review

Nvidia GeForce RTX 4080 Super Founders Edition (Image credit: Tom’s Hardware)

Nvidia’s penultimate GPU, with a speed bump and a theoretical price cut

Specifications

GPU: AD103

GPU Cores: 10240

Boost Clock: 2,550 MHz

Video RAM: 16GB GDDR6X 23 Gbps

TGP: 320 watts

Reasons to buy

+

Good efficiency and architecture

+

Second fastest GPU overall

+

Handles 4K ultra, with DLSS 3

Reasons to avoid

–

Still a large step down from RTX 4090

–

Only 16GB and a 256-bit interface

–

Barely faster than RTX 4080

–

High retail prices right now

The RTX 4080 Super at least partially addresses one of the biggest problems with the original RTX 4080: the price. The 4080 cost $1,199 at launch, a 72% increase in generational pricing compared to the RTX 3080. Now, the 4080 Super refresh drops the price to $999, which represents a 17% drop in generational pricing compared to the RTX 3080 Ti. Of course, the 3080 Ti felt overpriced, but it nearly matched the 3090 in performance, just with half the VRAM. The 4080 Super still trails the 4090 by a sizeable margin of 24% at 4K.

With the current price premiums on the RTX 4090, likely caused by AI companies buying up consumer GPUs, we’re also seeing increased pricing on the RTX 4080 Super: it costs about $200 over MSRP for the cheapest cards right now. <Insert annoyed sigh.> It’s still $600 less than the 4090, but it often costs about the same as the original RTX 4080 while those cards remain in stock.

On paper at least, the 4080 Super is certainly better than the now discontinued vanilla 4080, even if the upgrades are relatively minor. The 4080 Super increases core counts by 5%, GPU clocks by 2%, and VRAM clocks by 3%, which works out to a net 3% increase in performance. Faster and less expensive would be moving in the right direction, if cards were available at MSRP.
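
The 3% figure can be cross-checked against the 4K numbers in the RTX 4090 entry earlier in this article, where the 4090 is listed as 37% faster than the 4080 and 33% faster than the 4080 Super. A small Python sketch, with a helper name of our own choosing, divides one ratio by the other:

```python
def implied_ratio(ref_vs_a: float, ref_vs_b: float) -> float:
    """Speed of card B relative to card A, given how much faster a
    common reference card is than each of them."""
    return ref_vs_a / ref_vs_b

# From the RTX 4090 entry: at 4K the 4090 is 1.37x the 4080
# and 1.33x the 4080 Super.
super_vs_vanilla = implied_ratio(ref_vs_a=1.37, ref_vs_b=1.33)

print(f"4080 Super vs 4080: {(super_vs_vanilla - 1) * 100:.1f}% faster")  # 3.0%
```

Both inputs are rounded percentages, so treat the agreement as a sanity check rather than a second measurement.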

Nvidia also goes directly after AMD’s top GPU with the 4080 Super, and with the price reduction it becomes a far more compelling choice. Overall, the 4080 Super leads the 7900 XTX by 14–16 percent at 4K and 1440p, and even if we only look at rasterization performance, it’s basically tied or slightly faster than AMD’s best. Paying $60 extra for Nvidia’s faster and more efficient card, plus getting the other Nvidia extras, should be a relatively easy decision unless you absolutely refuse to consider buying an Nvidia GPU.

It’s not all smooth sailing for the 4080 Super, given the current retail availability and prices. Our advice is to only pick up a card if it costs closer to $1,000. Otherwise, there are better options. Predicting when or if prices will drop unfortunately goes beyond the capabilities of our crystal ball.

Further Reading:

Nvidia RTX 4080 Super review

Nvidia RTX 4080 review

AMD’s budget GPU — avoid ray tracing

Specifications

GPU: Navi 33

GPU Cores: 2048

Boost Clock: 2,625 MHz

Video RAM: 8GB GDDR6 18 Gbps

TBP: 165 watts

Reasons to buy

+

1080p max settings and 60fps for rasterization

+

AV1 and DP2.1 support

+

Decent overall value

Reasons to avoid

–

Not good for ray tracing

–

Only 8GB VRAM

–

Basically a sidegrade from RX 6650 XT

The Radeon RX 7600 and its Navi 33 GPU have replaced the previous generation Navi 23 parts, mostly as a sideways move with a few extra features. Performance ends up just slightly faster than the RX 6650 XT, while using about 20W less power, for about $20 extra. The extras include superior AI performance (if that matters to you), AV1 encoding and decoding hardware, and DisplayPort 2.1 UHBR13.5 outputs.

Prices dropped about $20 when the RX 7600 first appeared, but have crept back up to $259 at present. The previous generation RX 6600-class GPUs have generally kept pace on pricing changes. Specs are similar as well, with the same 8GB GDDR6 and a 128-bit memory interface. Both the 6650 XT and RX 7600 also use pretty much the same 18 Gbps memory (the 6650 XT is clocked at 17.5 Gbps), so bandwidth remains nearly the same.
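
Peak memory bandwidth follows directly from the bus width and the per-pin data rate, so the "nearly the same" claim is easy to check. The Python sketch below uses the 128-bit interface and the memory speeds quoted above; the function name is ours.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width times per-pin rate, divided by 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8

rx_7600 = memory_bandwidth_gbs(128, 18.0)     # 288.0 GB/s
rx_6650_xt = memory_bandwidth_gbs(128, 17.5)  # 280.0 GB/s

gain_pct = (rx_7600 / rx_6650_xt - 1) * 100
print(f"RX 7600: {rx_7600:.0f} GB/s, RX 6650 XT: {rx_6650_xt:.0f} GB/s (+{gain_pct:.1f}%)")
```

A gap of under 3% in bandwidth is in line with the small performance differences between the two cards.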

Across our test suite of 15 games, the RX 7600 outperformed the RX 6650 XT by 6% overall at 1080p, and just 3% at 1440p ultra. There really is almost no discernible difference in gaming performance. The RX 7600 does substantially better in AI workloads like Stable Diffusion, however: not as fast as Nvidia’s 4060, but far more competitive than the RX 6650 XT when using the latest projects.

Price-wise, there’s not really a direct Nvidia competitor. The RTX 4060 costs $30–$50 more, and while the RTX 3050 costs about $40 less, it’s also a far less capable GPU. AMD gets an easy win over the anemic 3050, delivering 40% more performance, but we never really liked that card. The RTX 4060 meanwhile offers roughly 25% more performance for 12% more money.

Buy the RX 7600 if you’re mostly looking for acceptable performance and efficiency at the lowest price possible, and you don’t want to deal with Intel’s drivers and don’t care about ray tracing. Otherwise, give the Arc A750 and RTX 4060 a thought. The RX 6600 also warrants a look, depending on how low you want to go on pricing — that card often sells for less than $200 now, though performance ends up being quite a bit lower than the RX 7600 (22% slower across our test suite).

There’s also the new RX 7600 XT, which doubles the VRAM to 16GB and gives a minor boost to GPU clocks, with an increase in power use as well. It’s about 10% faster than the vanilla 7600 overall, but costs 27% more — rather like the RTX 4060 Ti 16GB in that regard.

Further reading:

AMD Radeon RX 7600 review

AMD Radeon RX 7600 XT review

Sapphire Radeon RX 7700 XT Pure (Image credit: Tom’s Hardware)

Good performance at a reasonable price

Specifications

GPU: Navi 32

GPU Cores: 3456

Boost Clock: 2,544 MHz

Video RAM: 12GB GDDR6 18 Gbps

TBP: 230 watts

Reasons to buy

+

Good 1440p and 1080p performance

+

Excellent value for rasterization

+

Latest AMD features like AV1 encoding

+

Official price cut to $419

Reasons to avoid

–

Weaker RT performance

–

FSR2 can’t beat DLSS

–

Only slightly faster than RX 6800

After the successful RX 6700 XT and RX 6750 XT, we had high hopes for the RX 7700 XT. It only partially delivers, though a recent official price cut definitely helps. AMD’s latest generation midrange/high-end offering follows the usual path, trimming specs compared to the top models with a smaller Navi 32 GPU that can sell at lower prices. Both the RX 7800 XT (above) and 7700 XT use the same Navi 32 GCD (Graphics Compute Die), but the 7800 XT has four MCDs (Memory Cache Dies) while the 7700 XT cuts that number down to three, as well as having fewer CUs (Compute Units) enabled on the GCD.

The result of the cuts is that the RX 7700 XT ends up around 14% slower than the 7800 XT, and currently costs 14% less as well: linear scaling of price and performance, in other words. We like the 7800 XT and 7900 GRE more, which is why they rank higher on this list, but the 7700 XT still fills a niche. Basically, any of these three AMD GPUs warrants consideration, depending on your budget.

The closest competition from Nvidia comes in the form of the RTX 4060 Ti 16GB, which has nearly the same starting retail price these days ($420, give or take). AMD’s 7700 XT easily wins in rasterization performance, beating Nvidia’s GPU by up to 21%. Nvidia still keeps the ray tracing crown with a 10% lead, but DXR becomes less of a factor as we go down the performance ladder. However, Nvidia also has a sizeable lead in power use, averaging about 80W less power consumption.

Compared to the previous generation RX 6700 XT, the 7700 XT delivers around 30–35 percent more performance overall, and costs about 26% more. That makes it the better choice, assuming your budget will stretch to $420, and it comes with the other upgrades of RDNA 3 — AV1, DP2.1, improved efficiency, and better AI performance.

Further reading:

AMD Radeon RX 7700 XT review

AMD Radeon RX 7800 XT review

Nvidia GeForce RTX 4060 Ti Founders Edition (Image credit: Tom’s Hardware)

Mainstream Nvidia Ada for under $400

Specifications

GPU: AD106

GPU Cores: 4352

Boost Clock: 2,535 MHz

Video RAM: 8GB GDDR6 18 Gbps

TGP: 160 watts

Reasons to buy

+

Great efficiency

+

Latest Nvidia architecture

+

Generally faster than 3060 Ti

Reasons to avoid

–

8GB and 128-bit bus for $399?

–

Less VRAM than RTX 3060

–

DLSS 3 is no magic bullet

What is this, 2016? Nvidia’s RTX 4060 Ti is a $399 graphics card, with only 8GB of memory and a 128-bit interface — or alternatively, the 4060 Ti 16GB doubles the VRAM on the same 128-bit interface, for about $55 extra. We thought we had left 8GB cards in the past after the RTX 3060 gave us 12GB, but Nvidia seems more intent on cost-cutting and market segmentation these days. But the RTX 4060 Ti does technically beat the previous generation RTX 3060 Ti, by 10–15 percent overall in our testing.

There are plenty of reasons to waffle on this one. The larger L2 cache does mostly overcome the limited bandwidth from the 128-bit interface, but cache hit rates go down as resolution increases, meaning 1440p and especially 4K can be problematic. At least the price is the same as the outgoing RTX 3060 Ti, street prices start at $375 right now, and you do get some new features. But the 8GB still feels incredibly stingy, and the 16GB model mostly helps at 4K ultra, at which point the performance still tends to be poor.

There’s not nearly as much competition in the mainstream price segment as we would expect. AMD’s RX 7700 XT (above) is technically up to 10% faster than the 4060 Ti overall, but it costs about 15% more. Alternatively, it’s also 8% faster than the 16GB variant for basically the same price, but it’s the usual mix of winning in rasterization and losing in ray tracing. AMD’s next step down is either the 7600 XT, which trails the 4060 Ti by 25–30 percent in performance, or the previous generation RX 6700 XT / 6750 XT, which are still about 15% slower for 10% less money.

Looking at performance, the 4060 Ti generally manages 1440p ultra at 60 fps in rasterization games, but for ray tracing you’ll want to stick with 1080p — or use DLSS. Frame Generation is heavily used in Nvidia’s marketing materials, and it can provide a significant bump to your fps. However, it’s more of a frame smoothing technique as it interpolates between two frames and doesn’t apply any new user input to the generated frame.

Ultimately, the 4060 Ti, in both its 8GB and 16GB variants, is worth considering, but there are better picks elsewhere in our opinion. There’s a reason this ranks down near the bottom of our list: It’s definitely serviceable, but there are plenty of compromises if you go this route.

Further reading:

Nvidia RTX 4060 Ti review

Nvidia RTX 4060 Ti 16GB review

Intel Arc A380 by Gunnir (Image credit: Gunnir)

A true budget GPU for as little as $99

Specifications

GPU: ACM-G11

GPU Cores: 1024

Boost Clock: 2,400 MHz

Video RAM: 6GB GDDR6 15.5 Gbps

TGP: 75 watts

Reasons to buy

+

Great video encoding quality

+

True budget pricing

+

Generally does fine at 1080p medium

Reasons to avoid

–

Not particularly fast or efficient

–

Still has occasional driver woes

–

Requires 8-pin power connector

–

Wait for a sale price (<$100)

The Arc A380 perhaps best represents Intel’s dedicated GPU journey over the past year. Our initial review found a lot of problems, including game incompatibility, rendering errors, and sometimes downright awful performance. More than a year of driver updates has fixed most of those problems, and if you’re looking for a potentially interesting HTPC graphics card, video codec support remains a strong point.

We’ve routinely seen the Arc A380 on sale for $99, and at that point, we can forgive a lot of its faults. We suggest you hold out for another “sale” if you want to pick one up, rather than spending more — the current least expensive cards start at $119. It’s still very much a budget GPU, with slower performance than the RX 6500 XT, but it has 6GB of VRAM and costs less.

The Intel Arc Alchemist architecture was the first to add AV1 encoding and decoding support, and the quality of the encodes ranks right up there with Nvidia Ada, with AMD RDNA 3 trailing by a decent margin. And the A380 is just as fast at encoding as the beefier A770. Note also that we’re reporting boost clocks, as in our experience that’s where the GPU runs: Across our full test suite, the A380 averaged 2449 MHz on the Gunnir card that comes with a 50 MHz factory overclock. Intel’s “2050 MHz Game Clock” is very conservative, in other words.

Make no mistake that you will give up a lot of performance in getting down to this price. The A380 and RX 6500 XT trade blows in performance, but the RX 6600 ends up roughly twice as fast. It doesn’t have AV1 encoding support, though, and costs closer to $200. Ray tracing is also mostly a feature checkbox here: performance in every one of our DXR tests fell below 30 fps, with the exception of Metro Exodus Enhanced, which averaged 40 fps at 1080p medium.

Arc A380 performance might not be great, but it’s still a step up from integrated graphics solutions. Just don’t add an Arc card to an older PC, as it really needs ReBAR (Resizable Base Address Register) support and ideally a PCIe 4.0 slot.

Read: Intel Arc A380 review

How We Test the Best Graphics Cards

Tom’s Hardware 2024 GPU Testbed

Determining pure graphics card performance is best done by eliminating all other bottlenecks — as much as possible, at least. Our 2024 graphics card testbed consists of a Core i9-13900K CPU, MSI Z790 MEG Ace DDR5 motherboard, 32GB G.Skill DDR5-6600 CL34 memory, and a Sabrent Rocket 4 Plus-G 4TB SSD, with a be quiet! 80 Plus Titanium PSU and a Cooler Master CPU cooler.

We test across the three most common gaming resolutions, 1080p, 1440p, and 4K, using ‘medium’ and ‘ultra’ settings at 1080p and ‘ultra’ at 1440p and 4K. Where possible, we use ‘reference’ cards for all of these tests, like Nvidia’s Founders Edition models and AMD’s reference designs. Most midrange and lower GPUs do not have reference models, however, and in some cases we only have factory overclocked cards for testing. We do our best to select cards that are close to the reference specs in such cases.

For each graphics card, we follow the same testing procedure. We run one pass of each benchmark to “warm up” the GPU after launching the game, then run at least two passes at each setting/resolution combination. If the two runs are basically identical (within 0.5% or less difference), we use the faster of the two runs. If there’s more than a small difference, we run the test at least twice more to determine what “normal” performance is supposed to be.
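The procedure above can be sketched in a few lines of Python. This is a rough illustration rather than our actual tooling: `run_benchmark` is a hypothetical callable that returns the average fps of one pass, and using the median of four passes as the “normal” result is an assumption made for the example.

```python
from statistics import median
from typing import Callable, List


def pick_result(run_benchmark: Callable[[], float], tolerance: float = 0.005) -> float:
    """Return a representative fps figure for one setting/resolution combination."""
    run_benchmark()  # warm-up pass after launching the game; result is discarded

    runs: List[float] = [run_benchmark(), run_benchmark()]
    spread = abs(runs[0] - runs[1]) / max(runs)
    if spread <= tolerance:
        return max(runs)  # runs agree within 0.5%: keep the faster one

    # Runs disagree: collect at least two more passes.
    runs += [run_benchmark(), run_benchmark()]
    # ASSUMPTION: the median stands in for "normal" performance here.
    return median(runs)


# Made-up pass results, purely to exercise the function.
fake_passes = iter([90.0, 100.0, 100.3])
print(pick_result(lambda: next(fake_passes)))
```

In the example, the 90.0 fps warm-up pass is thrown away and the two remaining passes differ by about 0.3%, so the faster one is kept.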

We also look at all the data and check for anomalies. For example, the RTX 3070 Ti, RTX 3070, and RTX 3060 Ti are all generally going to perform within a narrow range: the 3070 Ti is about 5% faster than the 3070, which is about 5% faster than the 3060 Ti. If we see games where there are clear outliers (i.e. performance is more than 10% higher for the cards just mentioned), we’ll go back and retest whatever cards are showing the anomaly and figure out what the “correct” result should be.
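A sanity check along these lines might look like the following sketch. The function name, the data layout, and the fps numbers are all hypothetical placeholders; only the 10% threshold comes from the description above.

```python
from typing import Dict, List


def find_outliers(
    fps_by_game: Dict[str, Dict[str, float]],
    faster: str,
    slower: str,
    max_gap: float = 0.10,
) -> List[str]:
    """Return the games where `faster` leads `slower` by more than `max_gap`."""
    flagged = []
    for game, fps in fps_by_game.items():
        gap = fps[faster] / fps[slower] - 1.0
        if gap > max_gap:
            flagged.append(game)  # candidate for a retest
    return flagged


# Placeholder numbers, not real benchmark results.
results = {
    "Game A": {"RTX 3070 Ti": 105.0, "RTX 3070": 100.0},  # ~5% gap, expected
    "Game B": {"RTX 3070 Ti": 118.0, "RTX 3070": 100.0},  # 18% gap, suspicious
}
print(find_outliers(results, "RTX 3070 Ti", "RTX 3070"))
```

A flagged game is not necessarily wrong; it is simply a prompt to rerun those cards and see whether the gap holds up.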

Due to the length of time required for testing each GPU, updated drivers and game patches inevitably come out that can impact performance. We periodically retest a few sample cards to verify our results are still valid, and if not, we go through and retest the affected game(s) and GPU(s). We may also add games to our test suite over the coming year, if one comes out that is popular and conducive to testing. See our article on what makes a good game benchmark for our selection criteria.

Graphics Cards Performance Results

Our updated test suite of games consists of 15 titles. The data in the following charts is from testing conducted during the past several months. Only the fastest cards are tested at 1440p and 4K, but we do our best to test everything at 1080p medium and ultra.

For each resolution and setting, the first chart shows the geometric mean (i.e. equal weighting) for all 15 games. The second chart shows performance in the nine rasterization games, and the third chart focuses in on ray tracing performance in six games. Then we have the 15 individual game charts, for those who like to see all the data.
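For readers unfamiliar with the geometric mean, here is a small Python sketch of the calculation. The fps values are invented to show the effect and are not taken from our charts.

```python
import math
from typing import Sequence


def geometric_mean(values: Sequence[float]) -> float:
    """Geometric mean: the n-th root of the product of n positive values."""
    if not values or any(v <= 0 for v in values):
        raise ValueError("geometric mean needs at least one value, all positive")
    # Averaging the logarithms avoids overflow from multiplying many numbers.
    return math.exp(sum(math.log(v) for v in values) / len(values))


# Invented example: one demanding game and one very light game.
fps = [30.0, 120.0]
print(f"Arithmetic mean: {sum(fps) / len(fps):.1f} fps")
print(f"Geometric mean:  {geometric_mean(fps):.1f} fps")
```

The arithmetic mean (75 fps) is pulled toward the high-fps game, while the geometric mean (60 fps) is not. A 10% gain in either game moves the geometric mean by the same amount, which is what equal weighting means here. Python 3.8 and later also ship `statistics.geometric_mean`, which does the same job.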

AMD’s FSR has been out for over two years, and FSR 2.0 has passed the one-year mark. Nvidia’s DLSS 2 has been around since mid-2019, while Intel’s XeSS formally launched in October 2022. Twelve of the games in our test suite support DLSS 2, five more support DLSS 3, five now support FSR2, and four support XeSS. However, we’re running all of the benchmarks at native resolution for these tests. We have a separate article looking at FSR and DLSS, and the bottom line is that DLSS and XeSS improve performance with less compromise to image quality, but FSR2 works on any GPU.

The charts below contain nearly all of the current RTX 40/30-series, RX 7000/6000-series, and Intel’s Arc A-series graphics cards. Our GPU benchmarks hierarchy contains additional results for those who are interested. The charts are color coded with AMD in red, Nvidia in blue, and Intel in gray to make it easier to see what’s going on.

The following charts are up to date as of February 14, 2024.

Best Graphics Cards — 1080p Medium

(Gallery of 18 charts. Image credit: Tom’s Hardware)

Best Graphics Cards — 1080p Ultra

(Gallery of 18 charts. Image credit: Tom’s Hardware)

Best Graphics Cards — 1440p Ultra

(Gallery of 18 charts. Image credit: Tom’s Hardware)

Best Graphics Cards — 4K Ultra

(Gallery of 18 charts. Image credit: Tom’s Hardware)

Power, Clocks, and Temperatures

Most of our discussion has focused on performance, but for those interested in power and other aspects of the GPUs, here are the appropriate charts.

(Three galleries of 4 charts each. Image credit: Tom’s Hardware)

Choosing Among the Best Graphics Cards

We’ve provided a baker’s dozen of choices for the best graphics cards, recognizing that there’s plenty of potential overlap. The latest generation GPUs consist of Nvidia’s Ada Lovelace architecture, which improves on the previous Ampere architecture. AMD’s RDNA3 architecture likewise takes over from the previous RDNA2 architecture offerings. Finally, Intel Arc Alchemist GPUs provide some competition in the budget and midrange sectors.

Conveniently, Arc Alchemist, RDNA2/3, and Ada/Ampere all support the same general features (DirectX 12 Ultimate and ray tracing), though Arc and RTX cards also have additional tensor core hardware.

We’ve listed the best graphics cards that are available right now, along with their current online prices, which we track in our GPU prices guide. With many cards now selling below MSRP, plenty of people seem ready to upgrade, and supply also looks good in general (with the exception of the 4080 and 4090 cards). Our advice: Don’t pay more today for yesterday’s hardware. The RTX 40-series and RX 7000-series offer better features and more bang for the buck, unless you buy a used previous generation card.

If your main goal is gaming, you can’t forget about the CPU. Getting the best possible gaming GPU won’t help you much if your CPU is underpowered and/or out of date. So be sure to check out the Best CPUs for Gaming page, as well as our CPU Benchmark hierarchy to make sure you have the right CPU for the level of gaming you’re looking to achieve.

Our current recommendations reflect the changing GPU market, factoring in all of the above details. The GPUs are ordered using subjective rankings, taking into account performance, price, features, and efficiency, so slower cards may end up higher on our list.

When buying a graphics card, consider the following:

• Resolution: The more pixels you’re pushing, the more performance you need. You don’t need a top-of-the-line GPU to game at 1080p.

• PSU: Make sure that your power supply has enough juice and the right 6-, 8- and/or 16-pin connector(s). For example, Nvidia recommends a 550-watt PSU for the RTX 3060, and you’ll need at least an 8-pin connector and possibly a 6-pin PEG connector as well. Newer RTX 40-series GPUs use 16-pin connectors, though all of them also include the necessary 8-pin to 16-pin adapters.

• Video Memory: A 4GB card is the absolute minimum right now, 6GB models are better, and 8GB or more is strongly recommended. A few games can now use 12GB of VRAM, though they’re still the exception rather than the rule.

• FreeSync or G-Sync? Either variable refresh rate technology will synchronize your GPU’s frame rate with your screen’s refresh rate. Nvidia supports G-Sync and G-Sync Compatible displays (for recommendations, see our Best Gaming Monitors list), while AMD’s FreeSync tech works with Radeon cards.

• Ray Tracing and Upscaling: The latest graphics cards support ray tracing, which can be used to enhance the visuals. DLSS provides intelligent upscaling and anti-aliasing to boost performance with similar image quality, but it’s only on Nvidia RTX cards. AMD’s FSR works on virtually any GPU and also provides upscaling and enhancement, but on a different subset of games. New to the party are DLSS 3 with Frame Generation and FSR 3 Frame Generation, along with Intel XeSS, each with yet another subset of supported games. DLSS 3 also provides DLSS 2 support for non-40-series RTX GPUs.

Finding Discounts on the Best Graphics Cards

With the GPU shortages mostly over, you might find some particularly tasty deals on occasion. Check out the latest Newegg promo codes, Best Buy promo codes and Micro Center coupon codes.

Want to comment on our best graphics picks for gaming? Let us know what you think in the Tom’s Hardware Forums.
